How to approach batch processing for your data pipelines

11 November 2022 | Noor Khan

Batch processing is a method for taking high-volume, repetitive jobs and automating them into a series of steps that cover extracting, aggregating, organising, converting, mapping, and storing data. The process requires minimal human interaction, makes the tasks more efficient to complete, and puts less stress on internal systems.

What is a data pipeline?

A data pipeline is essentially a set of tools and/or processes that handle the movement and transformation of data through an automated sequence, transferring data from a source to a target location.

Find out more: Building data pipelines – a starting guide

Using batch processing on your data pipelines can make data movement, organisation, and structure more efficient, resource-friendly, and optimised for your business needs, and can make larger quantities of data available for processing.

What needs to happen for batch processing?

To manage data effectively, batch processing automates frequent, repetitive tasks and arranges the work by certain parameters, such as: Who submitted the job? Which program does it need to run? Where are the input and output locations? When does the job need to run?
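The four questions above map naturally onto a small job descriptor. The following is a minimal sketch only: `BatchJob`, its field names, and the `due_jobs` helper are hypothetical and not taken from any particular scheduler.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class BatchJob:
    """Hypothetical job descriptor; field names are illustrative only."""
    submitted_by: str  # who submitted the job
    program: str       # which program the job needs to run
    input_path: str    # where the input is read from
    output_path: str   # where the output is written to
    run_at: time       # when the job needs to run each day


def due_jobs(jobs: list[BatchJob], now: datetime) -> list[BatchJob]:
    """Return the jobs whose daily run time has passed, earliest first."""
    ready = [job for job in jobs if job.run_at <= now.time()]
    return sorted(ready, key=lambda job: job.run_at)


# Example data (invented for illustration)
jobs = [
    BatchJob("finance", "payroll.py", "in/payroll", "out/payroll", time(2, 0)),
    BatchJob("sales", "report.py", "in/sales", "out/sales", time(23, 0)),
]
ready = due_jobs(jobs, datetime(2022, 11, 11, 6, 0))
```

A real scheduler would also track whether a job has already run, along with dependencies and retries; this sketch only shows how the parameters can be captured in one place.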

Compared with other approaches, batch processing has a simpler, more streamlined structure and does not need to be maintained or upgraded as often. Because it can handle multiple jobs or data streams at a time, it can also support faster business intelligence and data comparisons.

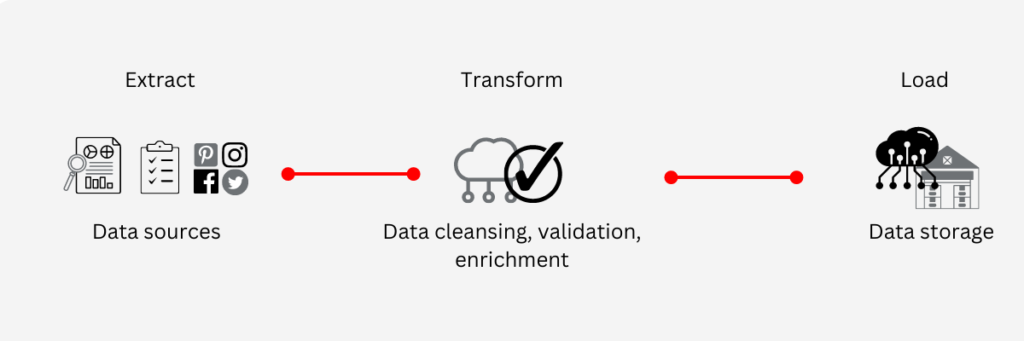

Batch processing with your data pipeline on the cloud

Organisations these days tend to use cloud storage systems to increase their available space and reduce overhead costs. Batch processing is one of the few areas where operations have not changed significantly: Extract, Transform, Load (ETL) data movement and transformation is still the most popular option and does not look to be disappearing any time soon.
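As a rough illustration of the three ETL stages, here is a minimal in-memory sketch. The sample data, the function names, and the use of a plain dictionary as the "warehouse" are all assumptions made for the example; a production pipeline would read from and write to real storage.

```python
import csv
import io
from collections import defaultdict

# Invented sample input standing in for a source file
RAW_CSV = """customer,amount
alice,10.50
bob,4.00
alice,2.25
"""


def extract(source: str) -> list[dict[str, str]]:
    """Extract: parse the raw CSV text into one dict per row."""
    return list(csv.DictReader(io.StringIO(source)))


def transform(rows: list[dict[str, str]]) -> dict[str, float]:
    """Transform: convert amounts to numbers and total them per customer."""
    totals: defaultdict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["customer"]] += float(row["amount"])
    return dict(totals)


def load(totals: dict[str, float], target: dict[str, float]) -> None:
    """Load: write the aggregated totals into the target store."""
    target.update(totals)


warehouse: dict[str, float] = {}  # stand-in for a real target store
load(transform(extract(RAW_CSV)), warehouse)
```

Keeping each stage as its own function makes it easier to test and debug one step at a time.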

The free flow of data along data pipelines has remained a popular option for businesses looking to handle increasingly large data requests and requirements.

One of the most significant issues batch processing can raise for companies is debugging: the systems can be difficult to manage without a dedicated team or skilled staff to identify and fix problems as they occur. This needs to be considered when deciding which service to use and who will help maintain the data pipelines.

Batch processing method

There are different methods for processing data, and batch processing is only one of them. You may also want to consider stream or micro-batch options; however, this will depend on the complexity of your needs, the size of your business, and the existing platforms or tools that you use to handle your data pipeline.
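To make the difference between batch and micro-batch concrete, the sketch below processes the same records once as a single batch and once in small chunks. The `micro_batches` helper and the sample readings are hypothetical; real micro-batch systems usually cut chunks by time window as well as by size.

```python
from itertools import islice
from typing import Iterable, Iterator


def micro_batches(records: Iterable[int], size: int) -> Iterator[list[int]]:
    """Yield consecutive chunks of at most `size` records."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(records)
    # Keep pulling chunks until the source is exhausted
    while chunk := list(islice(iterator, size)):
        yield chunk


readings = range(1, 8)  # invented sample data: 1..7

# Batch: wait for all records, then process them in one go
batch_total = sum(readings)

# Micro-batch: process small chunks as they become available
micro_totals = [sum(chunk) for chunk in micro_batches(readings, 3)]
```

Both approaches arrive at the same overall total; the micro-batch version simply makes partial results available sooner, at the cost of running more, smaller jobs.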

You will also need to consider whether a real-time data pipeline would be more effective than a batch process, especially if you are dealing with time-sensitive data or information. Jobs that run on a fixed schedule (such as payroll or billing systems that are processed weekly, fortnightly, or monthly, depending on the time of year) are generally a natural fit for batch processing.

Find out what you need to consider when looking to build scalable data pipelines

Batch processing for your data pipelines with Ardent

Ardent have worked with a number of clients to deliver a wide variety of data pipelines to suit each client's unique needs and requirements. If you are unsure what type of data processing is best suited to your data and data pipelines, then we can help. Our leading engineers, with decades of experience, can guide you through establishing your challenges, defining your requirements, and identifying the solution and technologies best suited to you. Get in touch to find out more, or explore our data pipeline development services.
