
Revolutionising the future of Television

Managing and optimising 4 petabytes of client data

22 July 2022 | Noor Khan

Key Challenges

Our client, dealing with a growing, high volume of varied and complex data, required advanced support and maintenance to avoid data loss, ensure high uptime and keep their data accessible.

Key Details

Service

Managed Services

Technology

Python, Amazon SQS, Amazon S3, Amazon EC2, Amazon Redshift, RazorSQL, PuTTY, Git, WinSCP

Industry

Technology

Sector

Entertainment

Key results

- Round-the-clock data support and management

- Near real-time data processing and reporting

- 4 petabytes of data managed effectively

- High level of error detection and alerting

- Continuous development in line with the evolution of technologies

- Over 7 years of data management

Leading consumer electronics brand

Dealing with huge volumes of data

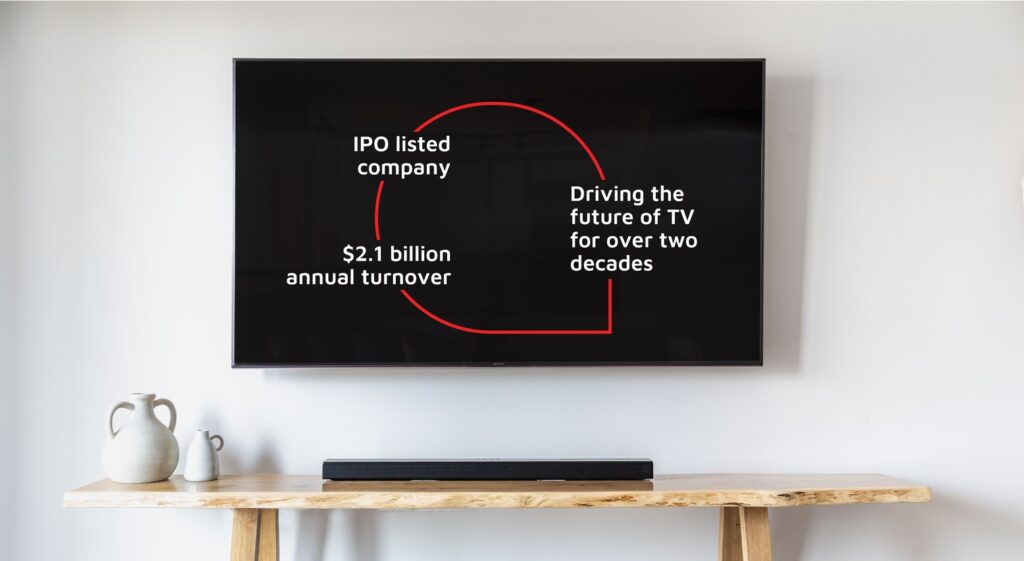

Our client is a leading US-based consumer electronics brand selling products such as LCD TVs, soundbars and operating systems for their smart TVs. They have been building innovative technology products for over two decades, are now a publicly listed company and have an annual turnover of more than $2.1 billion. With a large consumer audience, our client collects huge volumes of TV data from viewers across the US.

Ensuring data availability, consistency and accuracy

Delivering data quickly and efficiently

Data availability was crucial for our client. They were dealing with vast volumes of varied, complex TV and commercial data, collected continuously from all opted-in users across the US. It was vital that this data was available to our client's data science teams, and that it was accurate and complete, with no data drops or gaps.

Read our success story on building a 10 TB data lake

Optimising 4 petabytes of client data

Continuous data streams managed efficiently

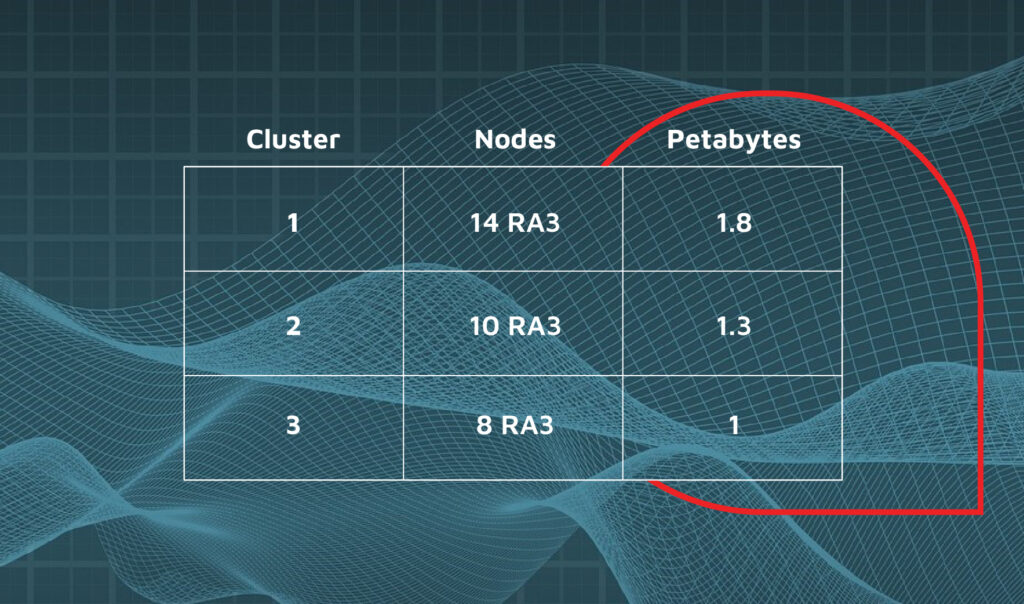

Ardent's exceptional engineers work around the clock to provide data engineering support and manage client data effectively. Our data engineering team manage three Redshift clusters on behalf of the client: the first contains data for the previous month, the second contains data from the previous year, and the third is used for data sharing.
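The article does not say how queries are directed to the three clusters. As an illustration only, the sketch below assumes a simple date-based split, treating "previous month" as the last 31 days and "previous year" as the last 365 days; the cluster names and the `cluster_for` helper are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional

# Hypothetical cluster identifiers: the article does not name the clusters.
RECENT_CLUSTER = "recent-month"       # data for the previous month
HISTORICAL_CLUSTER = "previous-year"  # data from the previous year
SHARING_CLUSTER = "data-sharing"      # cluster used for data sharing


def cluster_for(event_date: date, today: date, for_sharing: bool = False) -> Optional[str]:
    """Pick the cluster assumed to hold data for `event_date`.

    Assumption: "previous month" = last 31 days, "previous year" = last 365 days.
    Real retention windows may differ.
    """
    if for_sharing:
        return SHARING_CLUSTER
    age = today - event_date
    if age < timedelta(0):
        raise ValueError("event_date is in the future")
    if age <= timedelta(days=31):
        return RECENT_CLUSTER
    if age <= timedelta(days=365):
        return HISTORICAL_CLUSTER
    return None  # older than either assumed retention window
```

Keeping the most recent month in its own cluster is a common way to keep frequent, recent queries fast, while the larger historical cluster serves less time-sensitive analysis.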

These clusters contain a very large amount of data, as the image below shows. To put this into perspective, an average laptop has around 256 GB of storage, so 1 PB would equate to the storage of around 3,900 laptops.
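The laptop comparison is simple unit arithmetic. A quick check, assuming decimal units (1 PB = 1,000,000 GB) and the 256 GB laptop figure, also shows what the full 4 PB would equate to:

```python
# Decimal (SI) units, as storage capacities are usually quoted: 1 PB = 1,000,000 GB.
GB_PER_PB = 1_000_000
LAPTOP_STORAGE_GB = 256  # "average laptop" figure used in the comparison


def laptops_needed(petabytes: float, laptop_gb: int = LAPTOP_STORAGE_GB) -> float:
    """Return how many laptops of `laptop_gb` capacity would hold `petabytes` of data."""
    return petabytes * GB_PER_PB / laptop_gb


print(round(laptops_needed(1)))  # 3906, i.e. "around 3,900 laptops"
print(round(laptops_needed(4)))  # 15625 for the full 4 PB
```

With binary units (1 PiB = 1,048,576 GiB) the figure for one unit rises to 4,096 laptops, so "around 3,900" reflects the decimal convention.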

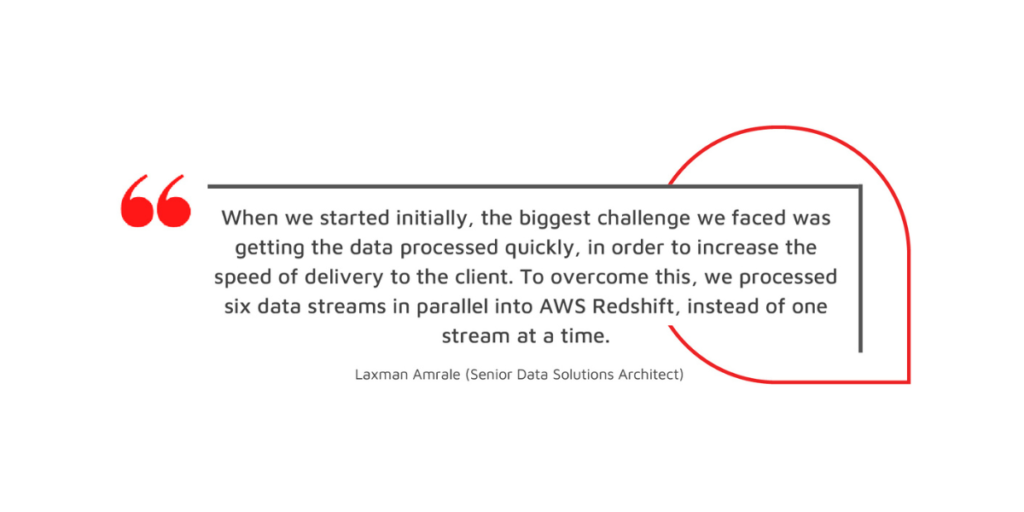

One of the biggest technical challenges our data engineering team overcame was processing the data and delivering it to the client in near real time. Our expert data engineers made the data available to our client's data science team in near real time by processing it in six parallel streams in Redshift, considerably increasing the speed at which the data was made available to the client.
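The article does not describe how work is divided between the six streams. A minimal sketch, assuming incoming batches are assigned round-robin to six workers and that each worker's job (the hypothetical `process_stream` callable) would, in a real pipeline, run its own load into Redshift:

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")

NUM_STREAMS = 6  # number of parallel streams described in the article


def split_into_streams(items: List[T], n: int = NUM_STREAMS) -> List[List[T]]:
    """Assign items round-robin to n streams of roughly equal size."""
    return [items[i::n] for i in range(n)]


def process_in_parallel(
    items: List[T],
    process_stream: Callable[[List[T]], R],
    n: int = NUM_STREAMS,
) -> List[R]:
    """Run `process_stream` on each stream concurrently; results come back in stream order."""
    streams = split_into_streams(items, n)
    with ThreadPoolExecutor(max_workers=n) as pool:
        return list(pool.map(process_stream, streams))
```

Threads are a reasonable choice here because each stream would spend most of its time waiting on the database rather than using the CPU. For example, `process_in_parallel(list(range(20)), len)` returns `[4, 4, 3, 3, 3, 3]`, the number of batches each stream received.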

The data is processed, transformed, loaded and sent to the client free of duplicates and errors, and is delivered in near real time.
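One way to keep loaded data free of duplicates and malformed rows is to filter each batch before it is loaded. The sketch below assumes each record carries a unique `event_id` and the required fields `device_id` and `timestamp`; these field names are hypothetical, since the client's schema is not described.

```python
from typing import Any, Dict, Iterable, List, Sequence, Tuple

Record = Dict[str, Any]

# Hypothetical schema: the real field names are not given in the article.
REQUIRED_FIELDS = ("event_id", "device_id", "timestamp")


def clean(
    records: Iterable[Record],
    key: str = "event_id",
    required: Sequence[str] = REQUIRED_FIELDS,
) -> Tuple[List[Record], List[Record]]:
    """Split a batch into (valid, rejected), dropping repeated `key` values.

    `key` should be one of `required`, so it is guaranteed present on valid records.
    """
    valid: List[Record] = []
    rejected: List[Record] = []
    seen = set()
    for record in records:
        if any(record.get(field) is None for field in required):
            rejected.append(record)  # malformed: keep for investigation, do not load
            continue
        if record[key] in seen:
            continue  # duplicate delivery of an event already in this batch
        seen.add(record[key])
        valid.append(record)
    return valid, rejected
```

Rejected records are returned rather than discarded so they can be investigated, in line with avoiding silent data loss. The `seen` set only catches duplicates within one batch; catching duplicates across batches would need a check against data already loaded.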

Advanced error detection and alerting

Ensuring data consistency

As part of the solution, our data engineers have a high level of error detection and alerting in place using PagerDuty, so that any issues are dealt with quickly and efficiently. Our teams monitor the data around the clock to make certain that any errors are communicated to the relevant teams and resolved immediately. This prevents data loss, which would otherwise leave gaps in the data.
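PagerDuty accepts alerts through its Events API v2: a JSON "trigger" event posted to `https://events.pagerduty.com/v2/enqueue`. The sketch below assumes data arrives in hourly batches, detects hours with no data, and builds an event describing them; the hourly cadence, the `source` name and the routing key are placeholders, not details of the client's setup.

```python
import json
import urllib.request
from datetime import datetime, timedelta
from typing import Any, Dict, Iterable, List

PAGERDUTY_EVENTS_URL = "https://events.pagerduty.com/v2/enqueue"


def find_gaps(arrivals: Iterable[datetime], start: datetime, end: datetime) -> List[datetime]:
    """Return every hour in [start, end) with no batch (assumes hourly batches)."""
    received = {t.replace(minute=0, second=0, microsecond=0) for t in arrivals}
    gaps = []
    hour = start
    while hour < end:
        if hour not in received:
            gaps.append(hour)
        hour += timedelta(hours=1)
    return gaps


def pagerduty_event(routing_key: str, gaps: List[datetime], source: str) -> Dict[str, Any]:
    """Build an Events API v2 'trigger' event describing missing batches."""
    if not gaps:
        raise ValueError("no gaps to report")
    return {
        "routing_key": routing_key,
        "event_action": "trigger",
        # Same dedup_key => PagerDuty groups repeat alerts into one incident.
        "dedup_key": f"{source}-gap-{gaps[0].isoformat()}",
        "payload": {
            "summary": f"{len(gaps)} hourly batch(es) missing from {source}",
            "source": source,
            "severity": "error",
            "custom_details": {"missing_hours": [g.isoformat() for g in gaps]},
        },
    }


def send_event(event: Dict[str, Any]) -> int:
    """POST the event to PagerDuty and return the HTTP status code."""
    request = urllib.request.Request(
        PAGERDUTY_EVENTS_URL,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Detecting gaps at the ingestion stage, rather than waiting for downstream reports to look wrong, is what allows missing data to be chased up while it can still be recovered.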

Progression of technologies

Continuous optimisation

We have been managing our client's data for over seven years, and our approach has developed over time as technologies have evolved. With a long-term project, continuous optimisation is key. We are therefore in the process of moving data processing from Amazon S3 to Amazon Kinesis Data Firehose for quicker data ingestion and reporting for the client.
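Amazon Kinesis Data Firehose accepts records through the `PutRecordBatch` API, which takes at most 500 records per call. A minimal sketch, assuming a boto3 Firehose client is passed in and events are sent as newline-delimited JSON; the delivery stream name is supplied by the caller and any name used with it is hypothetical.

```python
import json
from typing import Any, Dict, Iterable, List

MAX_RECORDS_PER_BATCH = 500  # PutRecordBatch limit on records per call


def to_firehose_records(events: Iterable[Dict[str, Any]]) -> List[Dict[str, bytes]]:
    """Encode events as newline-delimited JSON so they stay separable once delivered."""
    return [{"Data": (json.dumps(event) + "\n").encode("utf-8")} for event in events]


def send_to_firehose(client: Any, stream_name: str, events: List[Dict[str, Any]]) -> int:
    """Send events in batches of at most 500 and return the total failed-record count.

    `client` is expected to be a boto3 Firehose client, e.g. boto3.client("firehose").
    """
    records = to_firehose_records(events)
    failed = 0
    for i in range(0, len(records), MAX_RECORDS_PER_BATCH):
        batch = records[i:i + MAX_RECORDS_PER_BATCH]
        response = client.put_record_batch(DeliveryStreamName=stream_name, Records=batch)
        failed += response["FailedPutCount"]
    return failed
```

This sketch only enforces the 500-record limit; `PutRecordBatch` also caps each call at 4 MiB, and production code should resend the entries flagged with an `ErrorCode` in `RequestResponses` rather than just counting failures.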

Find out about our technology partners.

Actionable commercial insights

Boosting revenue and growth

The Ardent data engineering team have been effectively managing our client's data for over seven years and continue to innovate and evolve to improve data speed, accuracy and performance. Our client has peace of mind knowing that their data is in safe hands with Ardent, managed effectively, securely and in line with industry best practices. Our client is able to use the data collected to gain meaningful insights for commercial benefit, boosting revenue and growth.

Explore our operational monitoring and support services or, if you are looking to unlock your data's potential, get in touch today.
